Containers have become one of the most promising ways to develop applications because they package everything needed to run an application end to end. Kubernetes is becoming a popular choice among developers for running those containers, and large organizations increasingly depend on it.

Kubernetes is one of the fastest-growing projects in the history of open-source software, second only to Linux. According to a report by Statista, the Kubernetes market is expected to grow at a compound annual growth rate (CAGR) of 23.4% through 2031. The same report states that around 60% of companies have adopted Kubernetes in the past few years. The primary reason behind this surge is that the platform helps improve business operations.

Kubernetes, also known as K8s or Kube, is an open-source container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications. The combination of containerization and Kubernetes has emerged as a transformative technology for businesses. To help our readers understand this technology better and make the most of it, we have put together this blog to share the essentials of Kubernetes and its architecture in detail.

Why is it named “Kubernetes”?

Let’s look at where the name Kubernetes comes from.

The name Kubernetes comes from a Greek word meaning helmsman or pilot. The abbreviation K8s replaces the eight letters between the “K” and the “s” with the number 8. For more interesting details about Kubernetes, let’s take a quick look at its carefully designed logo.

Kubernetes and Docker – The “logo” connection

If we look at the Docker logo, we can see a whale carrying a stack of shipping containers, much like a cargo ship.

And in the Kubernetes logo, we can see a seven-spoked steering wheel, or helm.

Initially, the project name for Kubernetes was ‘Project 7’, and it was later renamed Kubernetes. Hence, the wheel in the Kubernetes logo has seven spokes. A ship is steered with a wheel, so the wheel in the logo represents how Kubernetes steers the ship that carries the (Docker) containers.

Why do we need Kubernetes?

In earlier days, software was developed with a monolithic architecture. More recently, monolithic architecture has increasingly given way to microservices. In a microservices architecture, an application is split into small services, and each service was typically deployed on its own server. However, a single microservice rarely uses the full capacity of a server, so resource utilization is poor. To overcome this, containers come into the picture: we can run multiple containers on a single server, with each container running one microservice, which achieves better resource utilization.

However, while deploying microservices on a container, it is essential to consider the points shared below:

- Containers on different hosts cannot discover or communicate with each other out of the box.

- Containers can be added or removed manually, but autoscaling and automatic load balancing are not available to manage resources.

- It is difficult to manage the containers and the resource usage of every deployed application.

Here, Kubernetes comes into the picture to create, scale, and manage multiple containers. Kubernetes helps overcome the challenges encountered while working with containers and hence is called a container orchestration (management) platform or tool. It was originally developed by Google, and its source code is written in Go. Orchestration here means coordinating any number of containers running across a cluster of machines so that they work as a single system.

Getting the Kubernetes Glossary Right

Before we get deeper into understanding Kubernetes, let us understand its core concepts.

Containers

Containers are independent, self-contained pieces of software that include everything necessary for execution, such as code, libraries, and any other external dependencies. This ensures that what runs is identical, even when it is executed in different environments.

Clusters

A Kubernetes cluster consists of nodes that run containerized applications, packaging each app with its dependencies for lightweight and flexible deployment. Unlike virtual machines, containers share the host operating system, allowing them to run across various environments (virtual, physical, cloud-based, or on-premises). This makes applications easier to develop, move, and manage. A Kubernetes cluster includes a master node (the control plane) and several worker nodes, which can be either physical or virtual machines, providing a robust and versatile platform for containerized applications.

Pods

Pods are the smallest deployable units in Kubernetes, consisting of one or more containers that share storage, network resources, and runtime specifications. Pods co-locate their containers, ensuring they run together in a shared context, like applications running on the same physical or virtual machine. This tight coupling models an application-specific “logical host,” making Pods an efficient way to manage containerized applications.
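As a rough illustration of this definition, a Pod can be described by a small manifest. The sketch below builds one as a Python dictionary and serializes it to JSON. The Pod name `web`, the label `app: web`, and the `nginx:1.27` image are placeholders chosen for this example, not values from any real deployment.

```python
import json

# Hypothetical single-container Pod; name, label, and image are placeholders.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}

# Serialize the manifest so it could be saved to a file such as pod.json.
pod_json = json.dumps(pod_manifest, indent=2)
print(pod_json)
```

Saved to a file, a manifest like this could be submitted to a cluster with `kubectl apply -f pod.json`.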

Services

In Kubernetes, a Service exposes a network application running in one or more Pods within your cluster. Services allow you to make Pods accessible on the network without altering your existing applications or using unfamiliar service discovery mechanisms. This makes it easy for clients to interact with both cloud-native and containerized legacy applications.
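A Service usually finds the Pods it fronts through a label selector. The sketch below mimics simple equality-based matching in plain Python. It does not use any Kubernetes client library, the Pod data is invented for illustration, and Services defined without a selector are not modelled.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Return True when every key/value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict, pods: list) -> list:
    """Return the names of the Pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Invented example data.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db"}},
]

backends = select_pods({"app": "web"}, pods)
print(backends)  # ['web-1', 'web-2']
```

Because the match is based on labels rather than on Pod names or IP addresses, Pods can come and go without clients of the Service having to change anything.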

Namespaces

Namespaces in Kubernetes are a way to organize a cluster into virtual sub-clusters, which is extremely helpful when a Kubernetes cluster is shared by different teams. A cluster can support any number of namespaces. Namespaces are logically separated from one another, yet workloads in different namespaces can still communicate with each other. It is important to remember that namespaces cannot be nested inside one another.

Note: Every namespaced resource in Kubernetes lives either in the default namespace or in a namespace created by the cluster operator.
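One common way for workloads in different namespaces to reach each other is through the cluster DNS name of a Service, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The helper below is only a sketch of that naming pattern. The function name and the `orders` and `team-a` values are made up for this example, and `cluster.local` is assumed as the cluster domain (the usual default, but configurable).

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified DNS name of a Service in a given namespace."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A workload in another namespace could address the Service by this name.
print(service_dns_name("orders", "team-a"))  # orders.team-a.svc.cluster.local
```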

Volumes

In Kubernetes, a volume is a directory with data accessible to the containers within a pod, offering a way to connect ephemeral containers to storage that outlives them. An ephemeral volume lasts as long as its pod exists. When the pod is deleted, the volume is also removed. If the pod is replaced with an identical one, a new, identically configured volume is created. Persistent volumes, by contrast, keep their data beyond the lifetime of any single pod.
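To make the idea of a pod-scoped volume concrete, here is a sketch of a Pod manifest in which two containers mount the same `emptyDir` volume, again written as a Python dictionary. The Pod, container, and volume names, the `busybox` image, and the commands are placeholders for this example. The small helper `undeclared_mounts` is a hypothetical check written for this sketch, not part of Kubernetes.

```python
import json

# Hypothetical Pod: two containers share one emptyDir volume mounted at /data.
shared_volume_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "shared-data"},
    "spec": {
        "volumes": [{"name": "scratch", "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox",
                "command": ["sh", "-c", "date > /data/started; sleep 3600"],
                "volumeMounts": [{"name": "scratch", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox",
                "command": ["sh", "-c", "sleep 3600"],
                "volumeMounts": [{"name": "scratch", "mountPath": "/data"}],
            },
        ],
    },
}


def undeclared_mounts(pod: dict) -> list:
    """List volumeMounts that refer to a volume the Pod does not declare."""
    declared = {volume["name"] for volume in pod["spec"].get("volumes", [])}
    return [
        mount["name"]
        for container in pod["spec"]["containers"]
        for mount in container.get("volumeMounts", [])
        if mount["name"] not in declared
    ]


print(json.dumps(shared_volume_pod, indent=2))
print(undeclared_mounts(shared_volume_pod))  # []
```

An `emptyDir` volume is created when the Pod is assigned to a node and is deleted together with the Pod, which matches the lifecycle described above.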

If you come from an industrial background, we recommend you download our thoughtfully crafted whitepaper on Kubernetes in Industrial Operations, which talks about the future of IIoT and how Kubernetes is transforming industrial operations.

Before we dive into understanding the architecture of Kubernetes, you can also read our blog that talks in detail about Kubernetes installation.

A high-level overview of Kubernetes Architecture

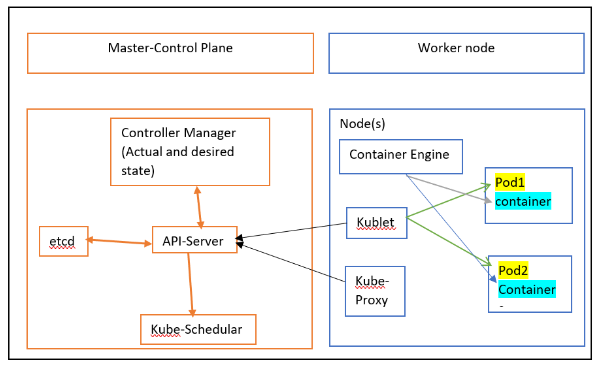

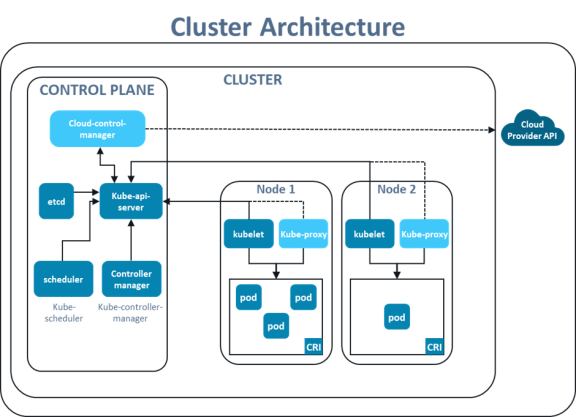

Kubernetes follows a master-worker (server-client) architecture. It has a Master (Control Plane), which acts as the server, and one or more Nodes (Worker Nodes), which act as clients. Each node can run one or many Pods, and containers are created inside a Pod. We can create multiple containers in one Pod; however, the usual recommendation is one container per Pod. The application is deployed in the container. The Pod is the smallest unit that K8s works with, which means that the control plane always deals with Pods and not directly with containers.

The diagrams in this section give a high-level pictorial representation of the same.

The components of the Kubernetes architecture are divided into the Control plane and the Worker node.

Control Plane components:

- API-Server: The API server is the front-end component of the Kubernetes control plane and exposes the Kubernetes API. It receives requests and forwards them to the appropriate component to accomplish the required action. The user applies a ‘.yaml’ or ‘.json’ manifest to the API server. The API server is designed to scale horizontally: several instances can run side by side to handle the request load.

- etcd: A key-value database that stores cluster metadata and the state of the cluster, its Pods, and their containers. etcd is a consistent and highly available store. All access to etcd goes through the API server. etcd is a separate open-source project rather than part of Kubernetes itself; however, a Kubernetes cluster needs it to work.

- Controller Manager: Runs the controllers that continuously compare the actual state of the cluster with the desired state and work to bring the two in line. The Controller Manager communicates with the API server, which in turn reads the current state from etcd.

- Kube Scheduler: Watches for newly created Pods that have no node assigned and selects a suitable node for each one to run on. It learns about those Pods through the API server, for example when a controller creates Pods to reach the desired state.
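The interplay between the Controller Manager and the Kube Scheduler can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not the real implementation: `reconcile` only compares replica counts, `schedule` only looks at free CPU, and all names and numbers are invented.

```python
def reconcile(desired_replicas: int, running_pods: list) -> list:
    """Return the actions needed to move the actual state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few Pods: ask for the missing ones to be created.
        return [("create", None)] * diff
    if diff < 0:
        # Too many Pods: remove the surplus (the choice of victims is simplified).
        return [("delete", name) for name in running_pods[diff:]]
    return []


def schedule(cpu_request: float, free_cpu_by_node: dict):
    """Filter out nodes that cannot fit the Pod, then pick the one with most free CPU."""
    feasible = {
        node: free for node, free in free_cpu_by_node.items() if free >= cpu_request
    }
    if not feasible:
        return None  # no node fits, so the Pod would stay pending
    return max(feasible, key=feasible.get)


actions = reconcile(desired_replicas=3, running_pods=["web-1"])
print(actions)  # [('create', None), ('create', None)]

node = schedule(cpu_request=0.5, free_cpu_by_node={"node-a": 0.25, "node-b": 2.0})
print(node)  # node-b
```

The real scheduler also works in a filter-then-score fashion, but it weighs many more factors than free CPU.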

Worker Node components:

- Pod: The smallest atomic unit of Kubernetes. Kubernetes always works with Pods and not directly with containers, because containers can be created with various tools such as Docker, rkt (CoreOS Rocket), Podman, Hyper-V, and Windows containers. Once a Pod fails, it is never repaired; a new Pod is created to replace it.

- Kube-Proxy: This component performs networking work on each node. For example, it maintains the network rules that route traffic addressed to a Service to the right Pods.

- Kubelet: The agent that runs on each node and listens to the Kubernetes control plane. It keeps track of the containers in the Pods on its node and reports their state to the API server, which records it in etcd. Based on that state, the Controller Manager takes the necessary action, with the Kube Scheduler placing any new Pods.

- Container Engine: The software that actually runs the containers, such as Docker. It is not part of Kubernetes itself; it is a separate product that uses OS-level virtualization to deliver software in packages called containers. Depending on the platform or tool used for the containers, it may need to be installed on the Master too.

Key Features of the Kubernetes Architecture

Kubernetes helps to schedule, run, and manage isolated containers running on Physical, Virtual, or Cloud machines. Below are some features of Kubernetes:

1. Autoscaling (Vertical and Horizontal)

Kubernetes offers two main types of autoscaling. Horizontal scaling, through the Horizontal Pod Autoscaler (HPA), automatically adds or removes pod replicas. Vertical scaling, through the Vertical Pod Autoscaler (VPA), automatically adjusts the CPU and memory reserved for the containers in a pod.
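The core calculation behind horizontal scaling is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that formula and clamps the result to a minimum and maximum replica count. The function name and the example numbers are invented, and details of the real autoscaler such as the tolerance band and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the HPA scaling formula, then clamp to the allowed replica range."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target scale out to 6 replicas.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

When the measured value is below the target, the same formula scales in: 3 replicas at 20% with a 50% target give a result of 2.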

2. Load balancing

The LoadBalancer service type in Kubernetes provisions an external load balancer, usually provided by cloud providers, to distribute incoming traffic evenly across the service. These services act as traffic controllers, efficiently directing client requests to the appropriate nodes hosting your pods. While traditional load balancing is straightforward, balancing traffic between containers requires special handling. Load balancing in Kubernetes is key to maximizing availability and scalability by efficiently distributing network traffic among multiple backend services. There are various options for load-balancing external traffic to pods, each with unique benefits and trade-offs.

3. Fault tolerance for Node and Pod failure

Kubernetes ensures fault tolerance for node and Pod failures through several mechanisms. If a node fails, the control plane automatically schedules replacements for the affected pods onto healthy nodes within the cluster, maintaining service availability. Pods are designed to be ephemeral, meaning Kubernetes can recreate them on different nodes if they fail. Additionally, Kubernetes uses ReplicaSets to maintain the desired number of pod replicas, ensuring that even if some pods fail, others continue to run, providing resilience. By managing node and pod failures efficiently, Kubernetes ensures high availability and reliability for applications running in the cluster.
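The effect of a node failure on pod placement can be sketched as follows. This is a deliberately simplified model: pods keep their names when they move (in a real cluster, replacement Pods get new names), placement only balances the pod count per node, and the node and pod names are invented.

```python
def reschedule_after_failure(placement: dict, failed_node: str,
                             healthy_nodes: list) -> dict:
    """Move pods off a failed node onto the least-loaded healthy nodes.

    `placement` maps pod name -> node name; a new mapping is returned.
    """
    if not healthy_nodes:
        raise ValueError("no healthy nodes left to run the pods")

    load = {node: 0 for node in healthy_nodes}
    new_placement = {}

    # Pods on healthy nodes stay where they are.
    for pod, node in placement.items():
        if node != failed_node and node in load:
            new_placement[pod] = node
            load[node] += 1

    # Every other pod is recreated on the healthy node with the fewest pods.
    for pod in placement:
        if pod not in new_placement:
            target = min(load, key=load.get)
            new_placement[pod] = target
            load[target] += 1

    return new_placement


before = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b"}
after = reschedule_after_failure(before, "node-b", ["node-a", "node-c"])
print(after)
```

The number of running replicas is the same before and after the failure, which is the property a ReplicaSet is meant to preserve.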

4. Platform independent (supports physical, virtual, and cloud)

Kubernetes provides platform independence, supporting deployments on physical servers, virtual machines, and cloud environments. This flexibility allows seamless application management across various infrastructures. By abstracting underlying hardware, Kubernetes enables consistent deployment and easy workload migration between on-premises and cloud providers, facilitating hybrid and multi-cloud strategies. This uniform platform helps businesses avoid vendor lock-in and optimize resource utilization across their IT landscape.

5. Automated rollouts and rollbacks

Kubernetes facilitates automated rollouts and rollbacks, streamlining the process of updating applications. Automated rollouts progressively deploy changes, monitoring application health to ensure stability. If issues are detected, Kubernetes can automatically trigger a rollback to the last stable version, minimizing downtime and maintaining application reliability. This automation ensures seamless updates and quick recovery from potential issues.
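A progressive rollout with a rollback on failure can be sketched as a loop that replaces one instance at a time and checks its health before moving on. This is a toy model: the function name, the version strings, and the `is_healthy` callback are assumptions made for this example, and a real Deployment controls the pace of a rollout with settings such as `maxSurge` and `maxUnavailable` rather than strictly one pod at a time.

```python
def rolling_update(pods: list, new_version: str, is_healthy) -> list:
    """Replace pods one at a time; restore the original versions if a check fails."""
    original = list(pods)
    updated = list(pods)
    for index in range(len(updated)):
        updated[index] = new_version
        if not is_healthy(new_version, index):
            # The new instance is unhealthy: go back to the last stable state.
            return original
    return updated


# Every new instance passes its health check, so the rollout completes.
print(rolling_update(["v1", "v1", "v1"], "v2", lambda version, index: True))
# ['v2', 'v2', 'v2']

# The second new instance fails its check, so the rollout is rolled back.
print(rolling_update(["v1", "v1", "v1"], "v2", lambda version, index: index < 1))
# ['v1', 'v1', 'v1']
```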

6. Batch execution (One time, Sequential, Parallel)

Kubernetes supports batch execution, allowing jobs to run one-time, sequentially, or in parallel. This flexibility enables efficient processing of batch tasks, such as data processing or automated testing, ensuring optimal resource utilization and streamlined workflows for various applications.
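In a Job manifest, the `completions` and `parallelism` fields are what distinguish these three modes. The sketch below builds Job manifests as Python dictionaries. The helper `make_job`, the job names, the `busybox` image, and the command are placeholders invented for this example.

```python
def make_job(name: str, completions: int = 1, parallelism: int = 1) -> dict:
    """Build a minimal batch/v1 Job manifest as a dictionary."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completions": completions,   # how many successful runs are needed
            "parallelism": parallelism,   # how many pods may run at the same time
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": name,
                            "image": "busybox",
                            "command": ["sh", "-c", "echo processing"],
                        }
                    ],
                }
            },
        },
    }


one_time = make_job("report")                                      # runs once
sequential = make_job("import", completions=5)                     # 5 runs, one at a time
parallel = make_job("resize", completions=10, parallelism=4)       # up to 4 at once
print(parallel["spec"]["completions"], parallel["spec"]["parallelism"])  # 10 4
```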

7. Container Health monitoring

Health checks help the K8s system understand whether an instance of the application is operating or not. Other containers should not send requests to, or otherwise try to access, a container in a bad state. By default, Kubernetes restarts a container when it crashes, and it starts routing traffic to the pod again once the containers in the pod are reported to be back in action. However, deployments can be made more robust by customizing the health checks, for example with liveness and readiness probes.
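The restart decision behind a liveness-style health check can be sketched as a threshold on consecutive failures. The function below is an illustration only: its name and the sample probe histories are invented, and the default of 3 mirrors the default `failureThreshold` of a Kubernetes probe.

```python
def needs_restart(probe_results: list, failure_threshold: int = 3) -> bool:
    """Return True when the most recent `failure_threshold` checks all failed.

    `probe_results` is a list of booleans, oldest first, where True means the
    check succeeded. `failure_threshold` is assumed to be at least 1.
    """
    if len(probe_results) < failure_threshold:
        return False
    return not any(probe_results[-failure_threshold:])


print(needs_restart([True, False, False, False]))  # True
print(needs_restart([False, False, True]))         # False
```

Requiring several consecutive failures keeps a single slow response from triggering an unnecessary restart.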

8. Self-healing

Kubernetes features self-healing capabilities, automatically detecting and addressing container failures. If a container crashes or becomes unresponsive, Kubernetes will redeploy it to restore its desired state and maintain application continuity. This automatic intervention ensures minimal disruption and consistent performance, reducing the need for manual intervention and enhancing the reliability and resilience of applications running in the cluster.

Cloud-based K8s services:

- Google’s managed K8s service is Google Kubernetes Engine (GKE)

- Azure’s managed K8s service is Azure Kubernetes Service (AKS)

- Amazon’s managed K8s service is Amazon Elastic Kubernetes Service (Amazon EKS)

Important Facts to know about Kubernetes

- Kubernetes is fully open source.

- Kubernetes manages everything through code.

- Kubernetes enables cloud-native development.

- Kubernetes can be run on a single machine for development purposes.

- Kubernetes can set up Persistent Volumes for stateful applications.

Parting Thoughts

Kubernetes stands out as an extremely strong platform for developing and running applications. Its capacity to deliver high availability and scalability makes it critical for cloud-native practices in the present-day tech landscape. This blog focused on decoding the basics of Kubernetes and the need for it, along with a high-level overview of its architecture. Learning K8s pays off in many ways: it takes a lot of effort out of deployment and helps with auto-scaling and intelligent utilization of available resources.

Calsoft, being a technology-first company, possesses over two decades of experience and expertise in various facets of technology solutions. Our Kubernetes engineering services have helped a myriad of businesses accelerate their digital transformation journeys.