This blog is for readers who want to get started with Multus CNI. It covers an overview of CNI in Kubernetes, the Multus CNI plugin, and basic configuration, along with a quick deployment scenario.

Kubernetes is one of the most popular container orchestration tools used in the industry. K8s makes deploying and managing applications in a cluster easier for developers and administrators. Kubernetes runs several vital components, such as the API server, scheduler, and controller manager, that coordinate with each other to maintain the desired state of the cluster. In addition to these components, a CNI (Container Network Interface) plugin is responsible for providing network connectivity within a Kubernetes cluster so that applications can talk to each other.

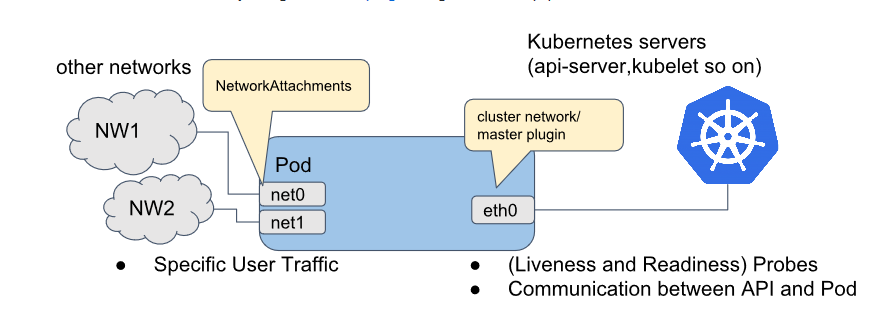

The choice of CNI plugin depends on the needs of the cluster. Popular CNI plugins include Calico, Flannel, Canal, and Weave Net. In general, these plugins allow an application's traffic to pass through the host to another application on the same host or on a different host within the cluster. A CNI is usually configured when the cluster is deployed. Here it is called the master CNI, and it typically provides the `eth0` interface in your pod, through which Kubernetes services interact with the pods. Now comes the interesting part: when you need separate interfaces for monitoring, for control and data plane separation, or for other architectural reasons, you have to attach additional interfaces to your pods, and this is where Multus comes into the picture.

Multus enables a pod to be deployed with multiple interfaces. The additional interfaces can use any of the available container networking plugins. Multus CNI can be deployed in the following ways:

- Install via daemonset using the quickstart-guide.

- Download binaries from the release page

- Use the Docker image from Docker Hub

The steps below can be used to quickly spin up a Multus-enabled environment. The only prerequisite is a running Kubernetes cluster.

- `git clone https://github.com/intel/multus-cni.git && cd multus-cni`

- `cat ./images/multus-daemonset.yml | kubectl apply -f -` (for Kubernetes 1.16+)

- `cat ./images/multus-daemonset-pre-1.16.yml | kubectl apply -f -` (for Kubernetes versions < 1.16)

If you use the quickstart-guide, this deploys a Multus daemonset that places the Multus binary in /opt/cni/bin and creates a new configuration file for Multus by reading the first configuration file (alphabetically) in /etc/cni/net.d/. Alternatively, you can create the configuration file manually by following the guide on setting up the conf file.
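The "first configuration file (alphabetically)" rule can be sketched in a few lines of shell. This only illustrates the selection order and is not the daemonset's actual code. The helper name `first_cni_conf`, the scratch directory, and the file names are hypothetical, and the sketch assumes plain byte-order sorting of `.conf` and `.conflist` files.

```shell
#!/usr/bin/env bash
# Sketch: pick the alphabetically first CNI config in a directory.
# first_cni_conf is a hypothetical helper, not part of Multus.
first_cni_conf() {
  find "$1" -maxdepth 1 -type f \( -name '*.conf' -o -name '*.conflist' \) \
    | LC_ALL=C sort | head -n 1
}

# Demo against a scratch directory instead of the real /etc/cni/net.d/.
demo_dir="$(mktemp -d)"
touch "$demo_dir/10-calico.conflist" "$demo_dir/87-other.conf"

first="$(first_cni_conf "$demo_dir")"
echo "first config: $(basename "$first")"
```

On a node, calling `first_cni_conf /etc/cni/net.d` would show which file is first in line under these assumptions.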

Once the Multus daemonset is up and running, the next step is to configure additional interfaces for the pods. Irrespective of the type of interface to be provisioned, a few mandatory configuration parameters must be passed in the config file in /etc/cni/net.d/.

- cniVersion: Tells each CNI plugin which version of the CNI specification is in use, for compatibility purposes.

- type: Name of the plugin binary in /opt/cni/bin to invoke.

- additional: A placeholder for additional, plugin-specific parameters. Each CNI plugin can define its own.
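As a rough illustration of these parameters, the sketch below writes a minimal config to a scratch directory (not the real /etc/cni/net.d/). The file name `99-example.conf` and the value of `additional` are placeholders chosen for this example; a real plugin would replace `additional` with its own settings.

```shell
#!/usr/bin/env bash
# Sketch: a minimal CNI config showing the parameters listed above.
conf_dir="$(mktemp -d)"
conf_file="$conf_dir/99-example.conf"   # hypothetical file name

cat > "$conf_file" <<'EOF'
{
  "cniVersion": "0.3.0",
  "type": "loopback",
  "additional": "information"
}
EOF

# "type" must match a binary name in /opt/cni/bin (here: loopback).
plugin_type="$(sed -n 's/.*"type": *"\([^"]*\)".*/\1/p' "$conf_file")"
echo "plugin binary to invoke: $plugin_type"
```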

Depending on the type of interface (macvlan, bridge, loopback), you specify the respective settings in the conf file. The following link provides reference example configurations.

Steps:

Below are the steps to configure a macvlan interface for a CentOS pod.

- Create a k8s cluster using kubeadm or kubespray, and configure the master/default CNI.

- Follow the steps above to place the Multus conf in /etc/cni/net.d/ and the Multus binary in /opt/cni/bin. If you use the quickstart guide, the daemonset handles this itself.

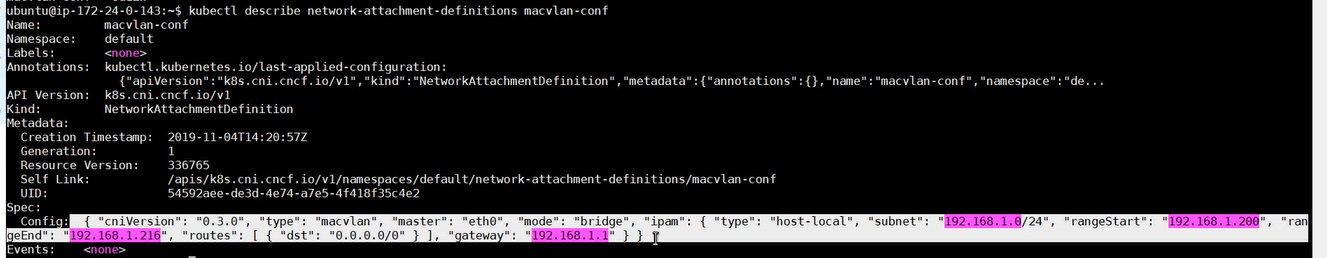

- Apply the manifest that configures the “macvlan” interface using CRD “NetworkAttachmentDefinition”.

- Create a sample centos pod by specifying the annotations for the interface to be used by the pod.

Command for creating “macvlan” interface

    cat <<EOF | kubectl create -f -
    apiVersion: "k8s.cni.cncf.io/v1"
    kind: NetworkAttachmentDefinition
    metadata:
      name: macvlan-conf
    spec:
      config: '{
          "cniVersion": "0.3.0",
          "type": "macvlan",
          "master": "eth0",
          "mode": "bridge",
          "ipam": {
            "type": "host-local",
            "subnet": "192.168.1.0/24",
            "rangeStart": "192.168.1.200",
            "rangeEnd": "192.168.1.216",
            "routes": [
              { "dst": "0.0.0.0/0" }
            ],
            "gateway": "192.168.1.1"
          }
        }'
    EOF
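To make the `ipam` settings concrete, the arithmetic below works out how many addresses the `host-local` plugin can hand out from this range. It is a minimal sketch that assumes both ends of the range are inclusive and that the range stays within one /24, so only the last octet changes. The variable names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: size of the address pool defined by rangeStart/rangeEnd.
range_start="192.168.1.200"
range_end="192.168.1.216"

# Strip everything up to the last dot to get the final octet.
start_octet="${range_start##*.}"
end_octet="${range_end##*.}"

# Assumption: both ends of the range are inclusive.
pool_size=$(( end_octet - start_octet + 1 ))
echo "addresses available: $pool_size"
```

Keep in mind that `host-local` stores its allocation state on each node's local filesystem, so the pool is tracked per node rather than cluster-wide.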

Command for creating a sample CentOS pod with the master CNI as its primary network and macvlan as the secondary (minion) network

    cat <<EOF | kubectl create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: samplepod
      annotations:
        k8s.v1.cni.cncf.io/networks: macvlan-conf
    spec:
      containers:
      - name: samplepod
        command: ["/bin/bash", "-c", "trap : TERM INT; sleep infinity & wait"]
        image: dougbtv/centos-network
    EOF
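Once both manifests are applied, a few `kubectl` commands can confirm the attachment. The sketch below is a dry run by default: the hypothetical `run` helper only prints each command and executes it only when the (also hypothetical) `EXECUTE=1` variable is set, so it is safe to paste on a machine without cluster access.

```shell
#!/usr/bin/env bash
# Sketch: inspect the NetworkAttachmentDefinition and the pod's interfaces.
# run and EXECUTE are illustrative helpers, not part of kubectl or Multus.
run() {
  echo "+ $*"
  if [ "${EXECUTE:-0}" = "1" ]; then
    "$@"
  fi
}

# List the custom resources created from the macvlan manifest.
run kubectl get network-attachment-definitions
run kubectl describe network-attachment-definitions macvlan-conf

# Show the interfaces inside the pod; eth0 and net1 should both appear.
run kubectl exec samplepod -- ip a
```

Running the script with `EXECUTE=1` against a live cluster performs the same checks for real.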

Use Cases:

- Separation of control, management and data/user network planes.

- Support different protocols or software stacks and different tuning and configuration requirements.

- Applying segregated network policies.

- Individual interface monitoring.

Ping demonstration on interfaces created using Multus CNI plugin:

Below are snapshots that demonstrate ping from “samplepod-2” to “samplepod-1” over “net1” interfaces created using the Multus plugin.

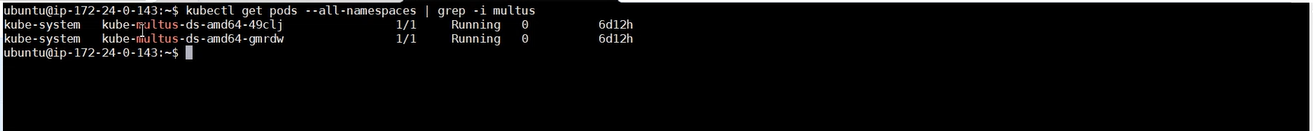

- Ensure pods deployed using kube-multus daemonset are up and running.

- Ensure that the “macvlan-conf” manifest mentioned above is applied with desired configurations as highlighted.

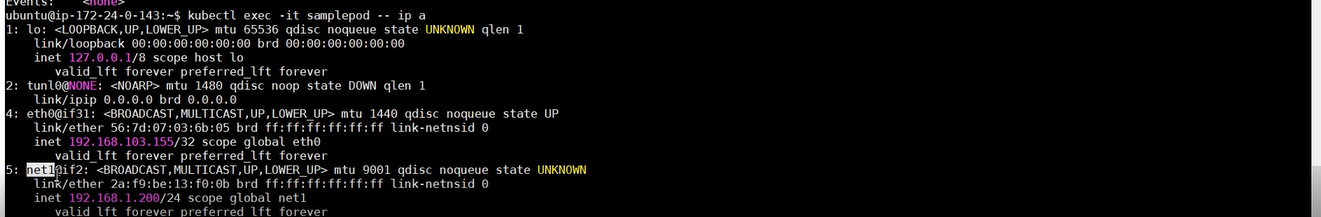

- Ensure the pods have an additional interface attached, named “net1”. Below are the interfaces listed for “samplepod-1”, where “eth0” is the primary/master interface (running Calico as CNI) and “net1” is the interface attached using Multus.

- Actual ping from “samplepod-2” to “samplepod-1” over interface added using Multus.
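The ping itself can be scripted along these lines. The parsing helper `extract_ipv4` is hypothetical and assumes the one-line output format of `ip -o -4 addr show`. The sample line stands in for real output, and the commented commands show how it might be wired to the two pods from the demo.

```shell
#!/usr/bin/env bash
# Sketch: pull the IPv4 address of net1 out of `ip -o -4 addr show net1`.
# extract_ipv4 is an illustrative helper, not part of Multus.
extract_ipv4() {
  # Field 4 looks like 192.168.1.200/24; drop the prefix length.
  awk '{ split($4, parts, "/"); print parts[1] }'
}

# Sample line in the format printed by `ip -o -4 addr show net1`.
sample='3: net1    inet 192.168.1.200/24 brd 192.168.1.255 scope global net1'
target_ip="$(printf '%s\n' "$sample" | extract_ipv4)"
echo "net1 address: $target_ip"

# Against a live cluster (pod names taken from the demo):
#   target_ip="$(kubectl exec samplepod-1 -- ip -o -4 addr show net1 | extract_ipv4)"
#   kubectl exec samplepod-2 -- ping -c 3 -I net1 "$target_ip"
```

Passing `-I net1` to `ping` makes sure the traffic leaves through the Multus-attached interface rather than through `eth0`.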

Multus CNI demo: demo-link

Hi Pranav,

I am working on a 3-node cluster with Calico as the master CNI. When I try to bring up Weave as a secondary IP using Multus on one of the worker nodes, I am not able to ping the Weave IP from the master node or from any pod. But this works with a single-node setup where I have only the master. Any input on how to resolve this?

Thanks,

Shobana Jothi

Can we do a few pre-checks before we start with the actual RCA?

1. On each node, make sure you have the Multus conf file in the /etc/cni/net.d directory, prefixed with 00, as kubelet honors the first configuration file it finds alphabetically.

2. Make sure you have the CNI binaries on each node in /opt/cni/bin.

3. Are your Multus, Weave, and Calico daemonsets running as intended?

4. Also, can you let me know your approach while creating the setup and the steps you followed, in order, so we can troubleshoot accordingly?
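The first two pre-checks lend themselves to a small script. The following is a sketch under stated assumptions: the function names and the scratch directories are made up for the demo, and on a real node you would pass /etc/cni/net.d and /opt/cni/bin instead.

```shell
#!/usr/bin/env bash
# Sketch: node-level pre-checks 1 and 2.
# has_multus_conf and missing_binaries are hypothetical helper names.

# Succeeds if a config file prefixed with 00 exists in the given directory.
has_multus_conf() {
  ls "$1"/00-* >/dev/null 2>&1
}

# Prints every named plugin that is not an executable file in the directory.
missing_binaries() {
  bin_dir="$1"; shift
  for plugin in "$@"; do
    [ -x "$bin_dir/$plugin" ] || echo "$plugin"
  done
}

# Demo with scratch directories standing in for a node's CNI paths.
net_d="$(mktemp -d)"; bin_d="$(mktemp -d)"
touch "$net_d/00-multus.conf" "$bin_d/multus"
chmod +x "$bin_d/multus"

has_multus_conf "$net_d" && echo "multus conf present"
echo "missing: $(missing_binaries "$bin_d" multus macvlan)"
```

Running the same two functions on every node makes it easy to spot a worker that differs from the master.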