What are the problems in infrastructure, and why address them –

Tuning infrastructure optimally has proven extremely challenging, because the quest for faster, cheaper and better infrastructure depends on multiple key performance indicators (KPIs). Virtualization creates the illusion of infinite capacity by hiding the hardware from workloads, but it does not remove the constraints of existing and evolving infrastructure elements in the compute, network and storage domains. To address this, constraints have to be derived from the infrastructure that is actually available: on the rack and shelf, on the network paths, and in the storage elements of a given domain (or across domains, as long as there is a sharing arrangement and a means to share).

Thus, the first part of the problem is to define the workload.

Workloads are applications and functions executed with real-time constraints on a given infrastructure. Workload types include AI, ML, DL, MEC, NFVi, IoT and 5G. A workload can have a typical profile, such as an ML profile for speech or image recognition. A few parameters typically define a workload and need to be optimized to use the available infrastructure effectively: processing cores and clock speed, memory bus bandwidth, memory hierarchy, network throughput and latency, IOMMU transfer bandwidth, and I/O operations per second (IOPS).
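To make the idea of a workload profile concrete, here is a minimal sketch that records the parameters listed above as a data structure and checks which rack/shelf nodes can actually host the workload. The class names, field names and units are hypothetical, `llc_mb` (last-level cache size) is an assumed stand-in for "memory hierarchy", and all numbers are illustrative rather than taken from Medhavi.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WorkloadProfile:
    """Hypothetical minimum requirements of a workload profile (e.g. ML speech recognition)."""
    name: str
    cores: int              # processing cores
    clock_ghz: float        # minimum clock speed
    memory_bw_gbps: float   # memory bus bandwidth
    llc_mb: float           # last-level cache, an assumed stand-in for "memory hierarchy"
    network_gbps: float     # network throughput
    max_latency_ms: float   # highest tolerable network latency
    iommu_bw_gbps: float    # IOMMU transfer bandwidth
    iops: int               # I/O operations per second


@dataclass(frozen=True)
class NodeCapacity:
    """Hypothetical description of what one node on a rack/shelf actually offers."""
    node_id: str
    cores: int
    clock_ghz: float
    memory_bw_gbps: float
    llc_mb: float
    network_gbps: float
    latency_ms: float
    iommu_bw_gbps: float
    iops: int


def fits(profile: WorkloadProfile, node: NodeCapacity) -> bool:
    """True if the node meets every minimum in the profile and stays within its latency bound."""
    return (
        node.cores >= profile.cores
        and node.clock_ghz >= profile.clock_ghz
        and node.memory_bw_gbps >= profile.memory_bw_gbps
        and node.llc_mb >= profile.llc_mb
        and node.network_gbps >= profile.network_gbps
        and node.latency_ms <= profile.max_latency_ms
        and node.iommu_bw_gbps >= profile.iommu_bw_gbps
        and node.iops >= profile.iops
    )


def candidate_nodes(profile: WorkloadProfile, nodes: List[NodeCapacity]) -> List[str]:
    """IDs of the nodes that can host the workload, in their original order."""
    return [n.node_id for n in nodes if fits(profile, n)]


if __name__ == "__main__":
    # Illustrative values only.
    speech = WorkloadProfile("ml-speech", cores=16, clock_ghz=2.5, memory_bw_gbps=100,
                             llc_mb=30, network_gbps=10, max_latency_ms=5,
                             iommu_bw_gbps=16, iops=50_000)
    nodes = [
        NodeCapacity("rack1-shelf1", 32, 2.8, 150, 40, 25, 2.0, 32, 200_000),
        NodeCapacity("rack1-shelf2", 8, 3.2, 80, 16, 10, 1.0, 16, 100_000),
    ]
    print(candidate_nodes(speech, nodes))  # ['rack1-shelf1']
```

The second node is rejected because it offers only 8 cores; this is the "constraints based on what is available on the rack and shelf" idea expressed as a simple filter.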

First, most users in our industry do not have the right information to plan their infrastructure; second, we do not use that infrastructure intelligently or efficiently enough to run their workloads. Infrastructure needs change every day in every industry and domain: edge deployments need one kind of infrastructure, while AI/ML/big data require a different level of intensity. Although we define reference architectures for every workload, we still fail to match application needs effectively, because application requirements fluctuate over time: traffic peaks demand more compute power, and some applications need more memory, I/O, etc. The question is how a user can understand an application's needs and configure the infrastructure intelligently enough to get roughly 80% of the way there, and then improve the rest through A/B testing, as Netflix does for its video streaming workloads.
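The A/B testing step can be illustrated with a minimal sketch: run the same job under two infrastructure configurations, collect completion times, and only declare a winner when the difference is clear. This uses Welch's t statistic as an assumed decision rule; the post does not say which statistical test Medhavi or Netflix uses, and the threshold of 2.0 is a rough rule of thumb, not a rigorous significance test.

```python
import math
import statistics
from typing import Sequence


def welch_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's t statistic for two independent samples with possibly unequal variances.

    Each sample needs at least two values, and the combined variance must be non-zero.
    """
    var_term = statistics.variance(a) / len(a) + statistics.variance(b) / len(b)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(var_term)


def ab_winner(latencies_a: Sequence[float], latencies_b: Sequence[float],
              t_threshold: float = 2.0) -> str:
    """Pick the configuration with lower latency, but only if the difference is clear.

    A positive t means configuration A is slower on average, so B wins.
    """
    t = welch_t(latencies_a, latencies_b)
    if t > t_threshold:
        return "B"
    if t < -t_threshold:
        return "A"
    return "no clear winner"


if __name__ == "__main__":
    # Illustrative job latencies (seconds) under two configurations; not measured data.
    config_a = [10.0, 11.0, 10.5, 10.2, 10.8]
    config_b = [8.0, 8.2, 7.9, 8.1, 8.3]
    print(ab_winner(config_a, config_b))  # B
```

Returning "no clear winner" rather than always picking the lower mean keeps noisy live measurements from flipping the configuration back and forth.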

How to find a solution, and the approach Medhavi provides for multi-component infrastructure –

The problem is that we have a wide variety of infrastructure providers, each of which defines the best option for workloads on its own configurations, and this costs end users more. The problem has existed for decades, and the process involved is complex, manual and not automated. Sometimes we overprovision cores in systems that are never utilized, or we have no visibility into what kinds of devices are accessing these back-end systems and what kinds of devices we need to execute a workload faster. That is where Medhavi comes into the picture. Medhavi recommends best-known configurations for workloads and, based on the infrastructure available, uses the compute workload manager to configure, execute and time the workloads for the best results. An additional component organizes A/B testing on live workloads to improve performance further.
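The recommend-then-execute-and-time loop described above can be sketched as follows. This is a minimal illustration under assumptions: `BEST_KNOWN_CONFIGS`, `recommend` and `run_and_time` are hypothetical names, the configuration keys (hugepages, NUMA pinning, SR-IOV) are illustrative tuning knobs rather than Medhavi's actual schema, and a plain Python callable stands in for the compute workload manager.

```python
import time
from typing import Any, Callable, Dict, Tuple

Config = Dict[str, Any]

# Hypothetical catalog of best-known configurations (BKCs) keyed by workload type.
# The keys and values are illustrative tuning knobs, not Medhavi's actual schema.
BEST_KNOWN_CONFIGS: Dict[str, Config] = {
    "ml-image": {"cores": 32, "hugepages": True, "numa_pinning": True},
    "nfvi": {"cores": 16, "hugepages": True, "sriov": True},
}
DEFAULT_CONFIG: Config = {"cores": 4, "hugepages": False}


def recommend(workload_type: str) -> Config:
    """Return a copy of the BKC for this workload type, or of a generic default."""
    return dict(BEST_KNOWN_CONFIGS.get(workload_type, DEFAULT_CONFIG))


def run_and_time(job: Callable[[Config], Any], config: Config) -> Tuple[Any, float]:
    """Stand-in for a compute workload manager: run the job with a config and time it."""
    start = time.perf_counter()
    result = job(config)
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    cfg = recommend("nfvi")
    # A toy job that just reports how many cores it was given.
    result, seconds = run_and_time(lambda c: f"ran on {c['cores']} cores", cfg)
    print(result, f"({seconds:.6f} s)")
```

`recommend` returns a copy so that per-run tweaks (for example, during A/B testing) never modify the shared catalog; the timings returned by `run_and_time` are what an A/B comparison would consume.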

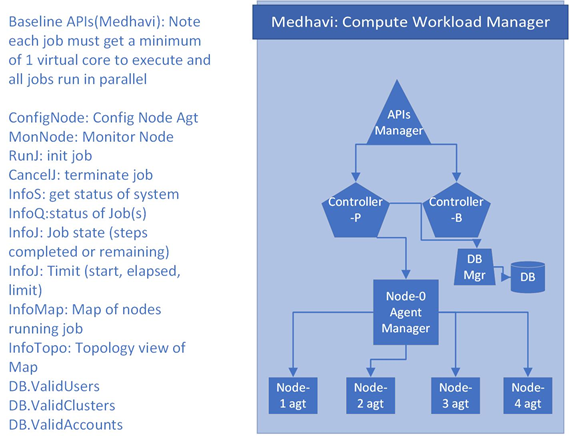

“Medhavi” is a Sanskrit term meaning “intelligent”. The Medhavi architecture revolves around simple compute workload managers and a simple concept of jobs; what is being attempted is to place and schedule those jobs using Open Infrastructure. While requirements are still evolving, the following is the baseline architecture.

Medhavi consists of multiple modules: API Controller, Device Engine Agent Manager, Engine Agent, Cluster Engine, ML Feedback Engine, etc. Each engine is a separate module within Medhavi that provides a different feature set, and all the engines communicate with each other continuously via a message queue.
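The message-queue style of communication between modules can be sketched in-process as below. The post does not say which message queue Medhavi uses or what its messages look like, so `MessageBus`, the module functions and the message fields are hypothetical; the node name and timing reported back are hard-coded placeholders. The flow follows one job from the API Controller through the Cluster Engine and Engine Agent to the ML Feedback Engine.

```python
import queue
from collections import defaultdict
from typing import Any, Dict, Optional

Message = Dict[str, Any]


class MessageBus:
    """In-process stand-in for a message queue: one FIFO queue per receiving module."""

    def __init__(self) -> None:
        self._queues: Dict[str, queue.Queue] = defaultdict(queue.Queue)

    def publish(self, module: str, message: Message) -> None:
        self._queues[module].put(message)

    def receive(self, module: str) -> Optional[Message]:
        """Return the next message for a module, or None if its queue is empty."""
        try:
            return self._queues[module].get_nowait()
        except queue.Empty:
            return None


def api_controller(bus: MessageBus, job: str) -> None:
    """Accept a job from the user and hand it to the Cluster Engine."""
    bus.publish("cluster_engine", {"type": "job_request", "job": job})


def cluster_engine(bus: MessageBus) -> None:
    """Choose a node for the job (hard-coded placeholder) and tell the Engine Agent."""
    msg = bus.receive("cluster_engine")
    if msg and msg["type"] == "job_request":
        bus.publish("engine_agent", {"type": "place", "job": msg["job"], "node": "rack1-shelf1"})


def engine_agent(bus: MessageBus) -> None:
    """Run the job (not shown) and report a placeholder timing to the ML Feedback Engine."""
    msg = bus.receive("engine_agent")
    if msg and msg["type"] == "place":
        bus.publish("ml_feedback_engine",
                    {"type": "result", "job": msg["job"], "node": msg["node"], "elapsed_s": 1.5})


if __name__ == "__main__":
    bus = MessageBus()
    api_controller(bus, "speech-recognition")
    cluster_engine(bus)
    engine_agent(bus)
    print(bus.receive("ml_feedback_engine"))
```

Because each module only reads its own queue and publishes to others by name, modules stay decoupled; in a real deployment the in-process queues would be replaced by a networked broker.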

Currently, the proof of concept (PoC) uses the Kubernetes cluster API to evaluate intelligence at the cluster level with the best-known configuration. Once the Medhavi team has set up A/B testing for a given workload, we will better understand what the API should look like and how it will evolve. This is an attempt to innovate in capturing intelligence from workloads and mapping it to emerging devices. We will discuss device requirements and emerging agents, and how they are being conceived, in future blog posts.
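One way a best-known configuration could be handed to a Kubernetes cluster is as CPU and memory requests and limits in a Pod manifest, optionally pinned to labelled nodes with a `nodeSelector`. This is a minimal sketch under that assumption; the post does not describe how the PoC uses the Kubernetes API, and the function name, image and label key below are hypothetical.

```python
import json
from typing import Any, Dict, Optional


def bkc_to_pod(name: str, image: str, cores: int, memory_gib: int,
               node_labels: Optional[Dict[str, str]] = None) -> Dict[str, Any]:
    """Build a Kubernetes Pod manifest whose resources mirror a best-known configuration.

    Requests equal limits for both CPU and memory, which places the Pod in the
    Guaranteed QoS class.
    """
    resources = {"cpu": str(cores), "memory": f"{memory_gib}Gi"}
    spec: Dict[str, Any] = {
        "containers": [{
            "name": name,
            "image": image,
            "resources": {"requests": dict(resources), "limits": dict(resources)},
        }]
    }
    if node_labels:
        # Restrict scheduling to nodes carrying these labels.
        spec["nodeSelector"] = dict(node_labels)
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": spec}


if __name__ == "__main__":
    pod = bkc_to_pod("speech-recognition", "registry.example.com/speech:1.0",
                     cores=16, memory_gib=64,
                     node_labels={"example.com/profile": "ml-speech"})
    print(json.dumps(pod, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML manifests.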

We will also present a demo of the PoC and release Medhavi as open source at the upcoming Open Infra Summit in Shanghai 2019. My co-presenters are Prakash Ramchandra (Dell, Principal Architect – Cloud and Comm. Solutions), Sujata Tibrewala (Intel, Networking Technology Evangelist) and Jayanthi Gokhale (Intellysys ASP).